Revolutionizing Fact-Checking: How AI's SAFE System Surpasses Human Accuracy at Lower Costs

Discover how Google DeepMind's SAFE, an AI-powered fact-checking tool, outperforms human annotators in accuracy while cutting costs by 20x. Explore the future of reliable information verification with AI.

Faheem Hassan

3/29/2024 · 2 min read

AI Revolutionizes Fact-Checking: DeepMind's SAFE System Outshines Humans at a Fraction of the Cost

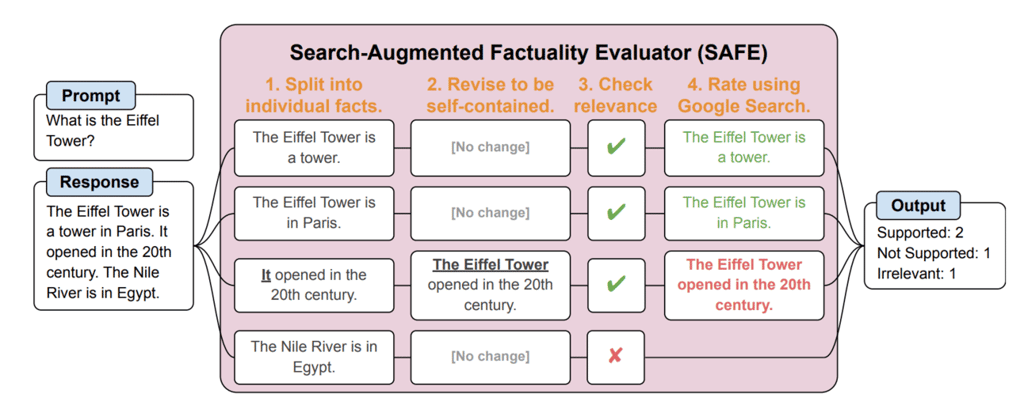

In a groundbreaking study by researchers at the University of California, Berkeley, and Google DeepMind, artificial intelligence (AI) was shown to significantly outperform human annotators at fact-checking. The study introduces the Search-Augmented Factuality Evaluator (SAFE), an AI tool that not only verifies factual claims more accurately than human annotators but does so at a cost 20 times lower. This result marks a pivotal moment in using AI to make information verification more reliable and efficient.


The Power of SAFE: A Closer Look

SAFE takes a new approach to fact-checking. It uses a large language model to break a long-form response into individual facts, then issues Google Search queries for each fact and reasons over the search results to decide whether that fact is supported. Checking every claim separately, rather than judging a response as a whole, gives a fine-grained and scalable assessment of factual accuracy and could set a new standard in the field.
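To make the split-search-rate loop concrete, here is a minimal sketch of a search-augmented fact checker. It is not SAFE's implementation: in SAFE, a large language model does the splitting and the reasoning, and the evidence comes from real Google Search. Here those steps are replaced by hypothetical toy stand-ins (`split_into_facts`, `make_toy_search`, `rate_fact`, `check_response`) that use sentence splitting, an in-memory corpus, and word overlap. The sketch also omits any further steps the real system performs. The part it does show is the overall shape: one independent search-backed verdict per fact.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical type: a "search engine" is any function from a query to text snippets.
SearchFn = Callable[[str], list[str]]


@dataclass
class FactRating:
    fact: str
    supported: bool
    evidence: list[str] = field(default_factory=list)


def tokens(text: str) -> set[str]:
    """Lowercased word tokens, used by the toy search and rating heuristics."""
    return set(re.findall(r"\w+", text.lower()))


def split_into_facts(response: str) -> list[str]:
    """Toy stand-in for SAFE's LLM splitting step: one sentence = one fact."""
    return [s for s in re.split(r"(?<=[.!?])\s+", response.strip()) if s]


def make_toy_search(corpus: list[str], min_overlap: int = 3) -> SearchFn:
    """Toy stand-in for Google Search: return corpus snippets sharing at
    least `min_overlap` word tokens with the query."""
    def search(query: str) -> list[str]:
        q = tokens(query)
        return [doc for doc in corpus if len(q & tokens(doc)) >= min_overlap]
    return search


def rate_fact(fact: str, search: SearchFn) -> FactRating:
    """Toy stand-in for SAFE's reasoning step: a fact counts as supported if
    some retrieved snippet contains every word token of the fact."""
    evidence = search(fact)  # the real system may issue several queries; this issues one
    supported = any(tokens(fact) <= tokens(doc) for doc in evidence)
    return FactRating(fact, supported, evidence)


def check_response(response: str, search: SearchFn) -> list[FactRating]:
    """Split a response into facts and rate each one independently."""
    return [rate_fact(f, search) for f in split_into_facts(response)]


# Usage with a tiny, made-up corpus standing in for web search results.
corpus = [
    "The Eiffel Tower is located in Paris, France.",
    "Mount Everest is the highest mountain above sea level.",
]
search = make_toy_search(corpus)
ratings = check_response("The Eiffel Tower is in Paris. Mount Everest is in Peru.", search)
for r in ratings:
    print("SUPPORTED  " if r.supported else "UNSUPPORTED", r.fact)
```

Because each fact gets its own verdict, a single response can be partly right. In this toy run, the first sentence is supported and the second is not. That per-fact granularity is what lets a checker like SAFE score long answers instead of accepting or rejecting them wholesale.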

Comparative Accuracy and Cost Efficiency

The study's findings are compelling: SAFE agreed with human fact ratings 72% of the time. The more telling result comes from the disagreements. On a random sample of 100 cases where SAFE and the human annotators disagreed, SAFE was found to be correct 76% of the time, compared with only 19% for the humans. SAFE is also more than 20 times cheaper than human fact-checkers, which makes fact-checking at scale far more affordable.
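As a rough way to see how the agreement rate and the disagreement results combine, here is a back-of-the-envelope calculation. It rests on two simplifying assumptions that the study itself does not state: (1) whenever SAFE and the human annotators agree, the rating is correct, and (2) the disagreement cases that were re-checked are representative of all disagreements. The function name `implied_accuracy` is hypothetical, and the outputs are illustrative estimates, not figures reported by the researchers.

```python
def implied_accuracy(agreement: float, win_rate_on_disagreement: float) -> float:
    """Illustrative overall accuracy under two ASSUMPTIONS (not from the study):
    1) every fact on which the two raters agree is rated correctly;
    2) the audited disagreements are representative of all disagreements."""
    return agreement + (1 - agreement) * win_rate_on_disagreement


AGREEMENT = 0.72   # SAFE agreed with human ratings 72% of the time
SAFE_WIN = 0.76    # SAFE judged correct on 76% of disagreements
HUMAN_WIN = 0.19   # humans judged correct on 19% of disagreements

safe_est = implied_accuracy(AGREEMENT, SAFE_WIN)    # 0.72 + 0.28 * 0.76 = 0.9328
human_est = implied_accuracy(AGREEMENT, HUMAN_WIN)  # 0.72 + 0.28 * 0.19 = 0.7732
unresolved = 1 - SAFE_WIN - HUMAN_WIN               # disagreements credited to neither rater

print(f"SAFE  ~ {safe_est:.1%}")
print(f"Human ~ {human_est:.1%}")
print(f"Disagreements credited to neither rater: {unresolved:.0%}")
```

Under these assumptions the gap is roughly 93% versus 77%. If assumption (1) fails, for example because both raters sometimes agree on a wrong verdict, both estimates drop by the same amount, but the difference between them stays the same.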

Implications for Large Language Models (LLMs)

These findings have significant implications for how LLMs are used in real-world settings. SAFE shows that an LLM with access to a search engine can perform accurate, automated fact-checking, which opens up new uses in fields where factual accuracy is paramount. This could change the way information is verified, making reliable, accurate data more accessible and cost-effective than ever before.

The advent of AI tools like SAFE, capable of outperforming human annotators at fact-checking, marks a major step forward in the pursuit of accuracy in the digital age. By sharply reducing the cost of verifying information while also improving accuracy, SAFE offers a glimpse of a future in which AI plays a central role in upholding the integrity of information. As the technology continues to evolve, its applications in improving factual accuracy and reliability are likely to keep expanding, opening a new chapter in the fight against misinformation.

Embrace the future of fact-checking by staying informed about the latest developments in AI and its applications in information verification. Tools like SAFE have the potential to transform the landscape of fact-checking, making it more reliable, efficient, and accessible for all.